Integrating AI paradigms to make sense of big data (and the world)


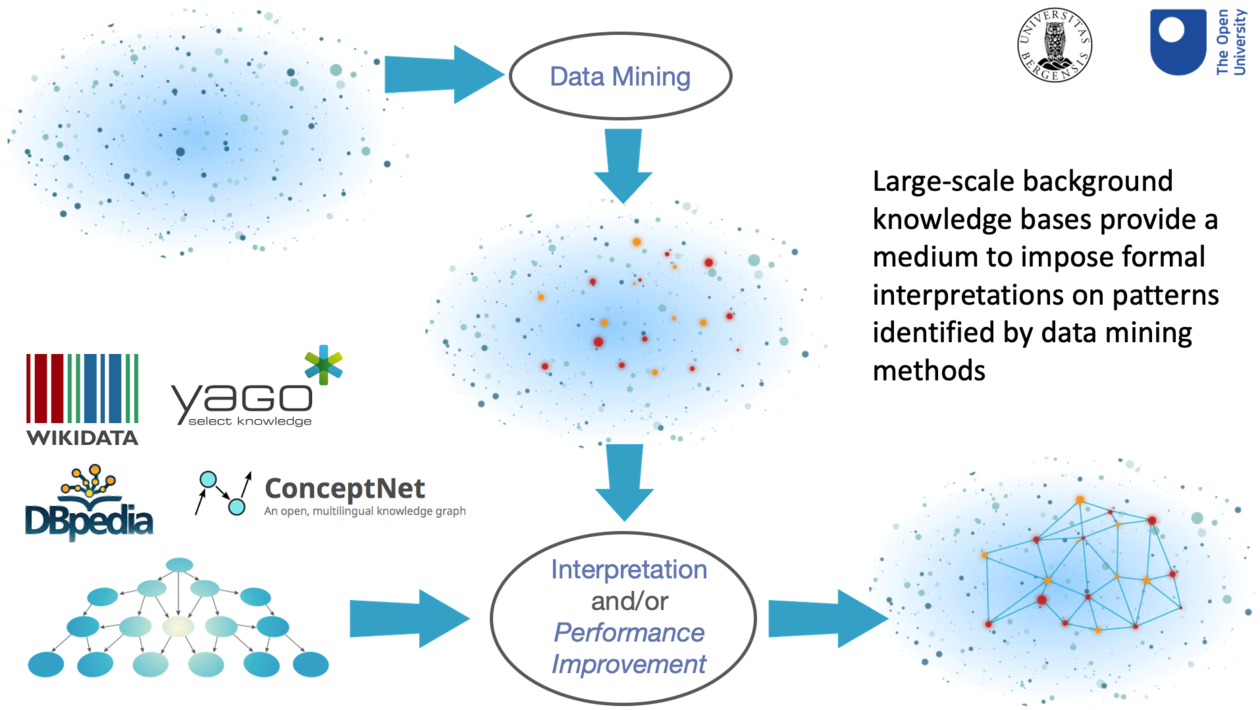

Abstract: Over the past 15-20 years we have witnessed a paradigm shift in Computer Science, brought about by the unprecedented availability of very large amounts of data. As a result of this paradigm shift, and also thanks to the emergence of novel machine learning techniques based on neural networks, new, highly effective solutions have been produced that allow us to model and predict the dynamics of a variety of phenomena, such as mobility patterns, consumer behaviour and financial markets. However, while big data clearly requires AI technologies, the opposite is true as well. More specifically, in accordance with the knowledge representation hypothesis proposed by Brian Smith back in 1979, a key assumption of symbolic AI is that intelligent behaviour is predicated on the availability of large amounts of knowledge. That is, knowledge is a precondition for intelligence. Hence, in the past 40 years we have also seen the development of several large-scale knowledge bases, which aim to provide the kind of non-brittle resources needed to carry out complex tasks in the real world.

While the deep learning and knowledge-based paradigms in AI are often seen as antithetical, in reality their integration is essential, both to provide AI systems with better explanation capabilities and to improve their performance.

In this talk I will illustrate our approach to combining these two paradigms, using examples from two application domains, robotics and scholarly analytics. In the former domain, I will present our work on visual intelligence in robots, emphasizing the crucial role played by background knowledge in improving a robot’s ability to make sense of its environment. In the context of the scholarly domain, I will discuss a number of examples of our work on making sense of the research landscape, including i) understanding the knowledge flow between academia and industry; ii) exploiting a geo-political analysis of Computer Science conferences to highlight a very static landscape in which new entries struggle to emerge; and iii) generating highly detailed maps of the space of research topics in a given field of science.

Finally, I will conclude my presentation by illustrating initial work with colleagues at the University of Bergen (UiB) and The Open University (OU) on modelling news angles, which aims to provide a robust epistemological basis for the development of novel solutions in computational journalism.

About the speaker: Prof Enrico Motta is currently a Professor of Knowledge Technologies at the Knowledge Media Institute (KMi) of the UK’s Open University and also holds a professorial appointment at the Department of Information Science and Media Studies of the University of Bergen in Norway. He holds a Laurea (Master-level degree) in Computer Science from the University of Pisa in Italy and a PhD in Artificial Intelligence from The Open University.

Prof Motta is the Head of the Intelligent Systems and Data Science research group in KMi. His work spans a variety of research areas related to data science, semantic and language technologies, intelligent systems and robotics, and human-computer interaction. He has authored over 350 refereed publications and has an h-index of 66. Over the years, Prof Motta has led KMi’s contribution to several national and international projects, receiving over £12M in external funding from a variety of institutional funding bodies, including the EU, EPSRC, AHRC, the Arts Council, NERC, HEFCE, and Innovate UK. His research has also attracted funding from commercial organizations, including the top two international scientific publishers, Elsevier and Springer Nature. Since 2014 he has been collaborating with Milton Keynes Council on its ‘smart city’ agenda, first as Director of the £17M, award-winning MK:Smart project, and then on the CityLabs and MK:5G initiatives.

His current activities focus on the use of AI techniques in the academic publishing industry (collaboration with Springer Nature); the deployment of intelligent robots in healthcare and urban settings (collaboration with Samsung); the development of sophisticated data management infrastructures for smart cities (collaboration with MK Council), and the use of AI techniques in computational journalism (a collaboration between the OU and the University of Bergen).

Over the years Prof Motta has been a member of strategic research panels for both the EU and UKRI, and has also advised research institutions and governments in a number of countries, including the US, China, the Netherlands, Austria, Finland, and Estonia.

He was also Editor-in-Chief of the International Journal of Human-Computer Studies from 2004 to 2018, and in 2003 he founded the ground-breaking European Summer School on Ontological Engineering and the Semantic Web (SSSW), which provided the main international postgraduate forum for learning about semantic technologies until its final edition in 2016.