Available Master's thesis topics in machine learning

Here we list topics that are available. You may also be interested in our list of completed Master's theses.

Learning and inference with large Bayesian networks

Most learning and inference tasks with Bayesian networks are NP-hard. Therefore, one often resorts to using different heuristics that do not give any quality guarantees.

Task: Evaluate the quality of large-scale learning or inference algorithms empirically.

Advisor: Pekka Parviainen

Sum-product networks

Traditionally, probabilistic graphical models use a graph structure to represent dependencies and independencies between random variables. Sum-product networks are a relatively new type of graphical model in which the graph structure models computations rather than the relationships between variables. The benefit of this representation is that inference (computing conditional probabilities) can be done in time linear in the size of the network.
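To make the linear-time claim concrete, here is a minimal sketch, assuming a tiny tree-structured sum-product network over two binary variables `X1` and `X2`. The node encoding, the helper names (`evaluate`, `indicator_mix`, `conditional`) and the weights are all hypothetical. A conditional probability is a ratio of two bottom-up passes, and in a tree each pass visits every node exactly once; for networks that share nodes between parents, the same bound requires caching each node's value instead of plain recursion.

```python
# Hypothetical node encoding for a toy sum-product network:
#   ("leaf", var, value)             indicator for var == value
#   ("prod", [child, ...])           product node
#   ("sum", [(weight, child), ...])  weighted sum node (weights sum to 1)


def evaluate(node, evidence):
    """Evaluate the network bottom-up for (partial) evidence.

    Variables missing from `evidence` are marginalized out by setting both of
    their indicator leaves to 1, so one pass gives the probability of the
    evidence. In a tree, every node is visited exactly once.
    """
    kind = node[0]
    if kind == "leaf":
        _, var, value = node
        if var not in evidence:
            return 1.0
        return 1.0 if evidence[var] == value else 0.0
    if kind == "prod":
        result = 1.0
        for child in node[1]:
            result *= evaluate(child, evidence)
        return result
    return sum(weight * evaluate(child, evidence) for weight, child in node[1])


def indicator_mix(var, p_one):
    """Sum node encoding a Bernoulli(p_one) distribution over one variable."""
    return ("sum", [(p_one, ("leaf", var, 1)), (1.0 - p_one, ("leaf", var, 0))])


def conditional(root, query, evidence):
    """P(query | evidence) as a ratio of two network evaluations."""
    return evaluate(root, {**evidence, **query}) / evaluate(root, evidence)


# A mixture of two fully factorized components (made-up weights).
ROOT = ("sum", [
    (0.6, ("prod", [indicator_mix("X1", 0.9), indicator_mix("X2", 0.8)])),
    (0.4, ("prod", [indicator_mix("X1", 0.2), indicator_mix("X2", 0.3)])),
])

if __name__ == "__main__":
    print(evaluate(ROOT, {"X1": 1, "X2": 1}))       # joint P(X1=1, X2=1)
    print(conditional(ROOT, {"X2": 1}, {"X1": 1}))  # P(X2=1 | X1=1)
```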

Potential thesis topics in this area: a) Compare the inference speed of sum-product networks and Bayesian networks, and characterize situations in which one model is better than the other. b) Sum-product networks are learned using heuristic algorithms. What is the effect of this approximation in practice?

Advisor: Pekka Parviainen

Bayesian Bayesian networks

The naming of Bayesian networks is somewhat misleading because there is nothing Bayesian in them per se; a Bayesian network is just a representation of a joint probability distribution. One can, of course, use a Bayesian network while doing Bayesian inference. One can also learn Bayesian networks in a Bayesian way: instead of finding a single optimal network, one computes the posterior distribution over networks.
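For very small problems, the posterior over structures can be computed exactly by enumeration, which shows what Bayesian structure learning targets. The sketch below assumes two binary variables, a uniform prior over the three possible DAGs, and the Cooper-Herskovits (K2) marginal likelihood with uniform Dirichlet parameter priors; the data and function names are made up for illustration. Because the number of DAGs grows super-exponentially with the number of variables, enumeration quickly becomes infeasible, which is where MCMC or variational approximations come in.

```python
from itertools import product
from math import exp, lgamma


def family_log_score(data, child, parents, arity=2):
    """Log K2 (Cooper-Herskovits) marginal likelihood of one variable given
    its parents, assuming uniform Dirichlet(1, ..., 1) parameter priors."""
    score = 0.0
    for parent_values in product(range(arity), repeat=len(parents)):
        rows = [r for r in data
                if all(r[p] == v for p, v in zip(parents, parent_values))]
        score += lgamma(arity) - lgamma(len(rows) + arity)
        for k in range(arity):
            score += lgamma(sum(1 for r in rows if r[child] == k) + 1)
    return score


def structure_posterior(data, structures):
    """Exact posterior over candidate DAGs under a uniform structure prior.

    `structures` maps a name to a dict {variable: [parent, ...]}.
    """
    log_scores = {
        name: sum(family_log_score(data, v, ps) for v, ps in dag.items())
        for name, dag in structures.items()
    }
    top = max(log_scores.values())  # subtract the maximum for numerical stability
    weights = {name: exp(s - top) for name, s in log_scores.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# The three DAGs over two variables A and B.
STRUCTURES = {
    "no edge": {"A": [], "B": []},
    "A -> B": {"A": [], "B": ["A"]},
    "B -> A": {"A": ["B"], "B": []},
}

# Made-up data in which B always equals A.
DATA = [{"A": 0, "B": 0}] * 50 + [{"A": 1, "B": 1}] * 50

if __name__ == "__main__":
    for name, prob in structure_posterior(DATA, STRUCTURES).items():
        print(f"{name}: {prob:.3g}")
```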

Task: Develop algorithms for Bayesian learning of Bayesian networks (e.g., MCMC, variational inference, EM).

Advisor: Pekka Parviainen

Large-scale (probabilistic) matrix factorization

The idea behind matrix factorization is to represent a large data matrix as a product of two or more smaller matrices. Such factorizations are often used in, for example, dimensionality reduction and recommendation systems. Probabilistic matrix factorization methods can be used to quantify uncertainty in recommendations. However, large-scale (probabilistic) matrix factorization is computationally challenging.
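As a minimal, non-probabilistic sketch of the idea, the code below approximates a partially observed rating matrix R by a product U V^T of two rank-2 factors, using stochastic gradient descent on the observed entries only. The ratings, learning rate, and regularization strength are hypothetical; methods that scale to real data (for example, alternating least squares or distributed SGD) are what the topics below would investigate.

```python
import random


def factorize(entries, n_rows, n_cols, rank=2, lr=0.02, reg=0.01, epochs=500, seed=0):
    """Approximate a partially observed matrix R by U V^T using SGD.

    `entries` is a list of observed (row, col, value) triples; unobserved
    cells do not enter the loss, which is what makes this usable for sparse
    rating matrices.
    """
    rng = random.Random(seed)
    U = [[rng.gauss(0.0, 0.1) for _ in range(rank)] for _ in range(n_rows)]
    V = [[rng.gauss(0.0, 0.1) for _ in range(rank)] for _ in range(n_cols)]
    order = list(entries)
    for _ in range(epochs):
        rng.shuffle(order)
        for i, j, r in order:
            err = r - sum(U[i][k] * V[j][k] for k in range(rank))
            for k in range(rank):
                u, v = U[i][k], V[j][k]
                # gradient step on the squared error plus L2 regularization
                U[i][k] += lr * (err * v - reg * u)
                V[j][k] += lr * (err * u - reg * v)
    return U, V


def rmse(entries, U, V):
    """Root-mean-square error of U V^T on the given observed entries."""
    sq = [(r - sum(a * b for a, b in zip(U[i], V[j]))) ** 2 for i, j, r in entries]
    return (sum(sq) / len(sq)) ** 0.5


# Hypothetical 4 x 4 rating matrix with 11 observed cells (row, col, rating).
RATINGS = [
    (0, 0, 5), (0, 1, 3), (0, 3, 1),
    (1, 0, 4), (1, 3, 1),
    (2, 0, 1), (2, 1, 1), (2, 3, 5),
    (3, 1, 1), (3, 2, 5), (3, 3, 4),
]

if __name__ == "__main__":
    U, V = factorize(RATINGS, n_rows=4, n_cols=4)
    print("training RMSE:", rmse(RATINGS, U, V))
    # predicted rating for an unobserved cell
    print("predicted R[1][1]:", sum(a * b for a, b in zip(U[1], V[1])))
```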

Potential thesis topics in this area: a) Develop scalable methods for large-scale matrix factorization (non-probabilistic or probabilistic). b) Develop probabilistic methods for implicit feedback (e.g., a recommendation engine when there are no ratings, only knowledge of whether a customer has bought an item).

Advisor: Pekka Parviainen

Bayesian deep learning

Standard deep neural networks do not quantify uncertainty in predictions. On the other hand, Bayesian methods provide a principled way to handle uncertainty. Combining these approaches leads to Bayesian neural networks. The challenge is that Bayesian neural networks can be cumbersome to use and difficult to learn.

The task is to analyze Bayesian neural networks and different inference algorithms in a simple setting.

Advisor: Pekka Parviainen

Deep learning for combinatorial problems

Deep learning is usually applied in regression or classification problems. However, there has been some recent work on using deep learning to develop heuristics for combinatorial optimization problems; see, e.g., [1] and [2].

Task: Choose a combinatorial problem (or several related problems) and develop deep learning methods to solve them.

References: [1] Vinyals, Fortunato and Jaitly: Pointer networks. NIPS 2015. [2] Dai, Khalil, Zhang, Dilkina and Song: Learning Combinatorial Optimization Algorithms over Graphs. NIPS 2017.

Advisors: Pekka Parviainen, Ahmad Hemmati

Estimating the number of modes of an unknown function

Mode seeking is concerned with estimating the number of local maxima of a function f. Sometimes one can find modes by, e.g., looking for points where the derivative of the function is zero. Often, however, the function is unknown and we only have access to some (possibly noisy) values of the function.

In topological data analysis, we can analyze topological structures using persistent homology. For 1-dimensional signals, this can translate into looking at the birth/death persistence diagram, i.e., the birth and death of connected topological components as we expand the space around each point where we have observed our function. These observations turn out to be closely related to the modes (local maxima) of the function. A recent paper [1] proposed an efficient method for mode seeking.
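The link between persistence and modes can be sketched in a few lines. The code below is a standard 0-dimensional persistence computation for the superlevel-set filtration of a sampled 1-D signal (union-find with the elder rule), not the specific method of [1]; the function names and thresholds are illustrative. Each local maximum is born at its height and dies when its component merges with one that has a higher peak, so counting the maxima whose persistence exceeds a noise threshold gives a simple, non-probabilistic estimate of the number of modes.

```python
def mode_persistence(values):
    """0-dimensional persistence of the superlevel-set filtration of a
    sampled 1-D signal, where neighbouring samples are connected.

    Returns (birth, death) pairs: a local maximum is born at its height and
    dies at the height where its component merges into one with a higher
    peak (elder rule). The global maximum never dies (death = -inf).
    """
    n = len(values)
    if n == 0:
        return []
    parent = list(range(n))  # union-find forest; each root is its component's peak
    active = [False] * n

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    pairs = []
    # sweep from the highest sample value down to the lowest
    for i in sorted(range(n), key=lambda k: values[k], reverse=True):
        active[i] = True
        for j in (i - 1, i + 1):
            if not (0 <= j < n and active[j]):
                continue
            a, b = find(i), find(j)
            if a == b:
                continue
            hi, lo = (a, b) if values[a] >= values[b] else (b, a)
            if values[lo] > values[i]:  # skip zero-persistence pairs
                pairs.append((values[lo], values[i]))
            parent[lo] = hi  # the younger (lower) peak dies here
    pairs.append((max(values), float("-inf")))
    return pairs


def count_modes(values, threshold):
    """Number of modes whose persistence (birth - death) exceeds `threshold`."""
    return sum(1 for birth, death in mode_persistence(values) if birth - death > threshold)
```

With a noisy signal, small bumps produce short-lived pairs, so the choice of threshold is exactly where a probabilistic treatment (e.g., with Gaussian processes) would come in.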

In this project, the task is to extend the ideas from [1] to obtain a probabilistic estimate of the number of modes. To this end, one has to use probabilistic methods such as Gaussian processes.

[1] U. Bauer, A. Munk, H. Sieling, and M. Wardetzky. Persistence barcodes versus Kolmogorov signatures: Detecting modes of one-dimensional signals. Foundations of Computational Mathematics 17:1-33, 2017.

Advisors: Pekka Parviainen, Nello Blaser

Causal Abstraction Learning

We naturally make sense of the world around us by working out causal relationships between objects and by representing in our minds these objects with different degrees of approximation and detail. Both processes are essential to our understanding of reality, and likely to be fundamental for developing artificial intelligence. The first process may be expressed using the formalism of structural causal models, while the second can be grounded in the theory of causal abstraction [1].

This project will consider the problem of learning an abstraction between two given structural causal models. The primary goal will be the development of efficient algorithms able to learn a meaningful abstraction between the given causal models.

[1] Rubenstein, Paul K., et al. "Causal consistency of structural equation models." arXiv preprint arXiv:1707.00819 (2017).

Advisor: Fabio Massimo Zennaro

Causal Bandits

The name "multi-armed bandit" comes from slot machines, informally known as "one-armed bandits", and is the formal name of a large class of problems in which an agent has to choose an action among a range of possibilities without knowing the ensuing rewards. Multi-armed bandit problems are among the most fundamental reinforcement learning problems, as they confront an agent directly with the exploration-exploitation trade-off.

This project will consider a class of multi-armed bandits in which an agent, upon taking an action, interacts with a causal system [1]. The primary goal will be the development of learning strategies that take advantage of the underlying causal system in order to learn optimal policies in as little time as possible.
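For reference, a standard non-causal baseline is UCB1, which picks the arm maximizing its empirical mean reward plus an exploration bonus that shrinks as the arm is played more often. The sketch below assumes Bernoulli rewards with made-up success probabilities; causal bandit strategies [1] aim to improve on such baselines by exploiting the structure of the underlying causal system, which UCB1 ignores.

```python
import math
import random


def ucb1(arm_probs, rounds, seed=0):
    """Run UCB1 on Bernoulli arms and return how often each arm was pulled."""
    rng = random.Random(seed)
    n_arms = len(arm_probs)
    counts = [0] * n_arms    # number of pulls per arm
    totals = [0.0] * n_arms  # summed rewards per arm
    for t in range(rounds):
        if t < n_arms:
            arm = t  # play every arm once to initialise its estimate
        else:
            # empirical mean (exploitation) + confidence bonus (exploration),
            # where t is the number of pulls made so far
            arm = max(
                range(n_arms),
                key=lambda a: totals[a] / counts[a] + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < arm_probs[arm] else 0.0
        counts[arm] += 1
        totals[arm] += reward
    return counts


if __name__ == "__main__":
    print(ucb1([0.2, 0.5, 0.8], rounds=3000))  # pulls per arm
```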

[1] Lattimore, Finnian, Tor Lattimore, and Mark D. Reid. "Causal bandits: Learning good interventions via causal inference." Advances in neural information processing systems 29 (2016).

Advisor: Fabio Massimo Zennaro

Reinforcement Learning for Computer Security

The field of computer security presents a wide variety of challenging problems for artificial intelligence and autonomous agents. Guaranteeing the security of a system against attacks and penetrations by malicious hackers has always been a central concern of this field, and machine learning could now offer a substantial contribution. Security capture-the-flag simulations are particularly well-suited as a testbed for the application and development of reinforcement learning algorithms [1].

This project will consider the use of reinforcement learning for the preventive purpose of testing systems and discovering vulnerabilities before they can be exploited. The primary goal will be the modelling of capture-the-flag challenges of interest and the development of reinforcement learning algorithms that can solve them.

[1] Erdodi, Laszlo, and Fabio Massimo Zennaro. "The Agent Web Model--Modelling web hacking for reinforcement learning." arXiv preprint arXiv:2009.11274 (2020).

Advisors: Fabio Massimo Zennaro, Laszlo Tibor Erdodi

Approaches to AI Safety

The world and the Internet are increasingly populated by artificial autonomous agents carrying out tasks on our behalf. Many of these agents are given an objective and learn their behaviour by trying to achieve that objective as well as they can. However, this approach cannot guarantee that an agent, while learning its behaviour, will not undertake actions that may have unforeseen and undesirable effects. Research in AI safety tries to design autonomous agents that behave in a predictable and safe way [1].

This project will consider specific problems and novel solutions in the domain of AI safety and reinforcement learning. The primary goal will be the development of innovative algorithms and their implementation within established frameworks.

[1] Amodei, Dario, et al. "Concrete problems in AI safety." arXiv preprint arXiv:1606.06565 (2016).

Advisor: Fabio Massimo Zennaro

Reinforcement Learning for Super-modelling

Super-modelling [1] is a technique for combining complex dynamical models: pre-trained models are aggregated, with messages and information exchanged in order to synchronize the behavior of the different models and produce more accurate and reliable predictions. Super-models are used, for instance, in weather and climate science, where pre-existing models are ensembled and their states dynamically aggregated to generate more realistic simulations.

This project will consider how reinforcement learning algorithms may be used to solve the coordination problem among the individual models forming a super-model. The primary goal will be the formulation of the super-modelling problem within the reinforcement learning framework and the study of custom RL algorithms to improve the overall performance of super-models.

[1] Schevenhoven, Francine, et al. "Supermodeling: improving predictions with an ensemble of interacting models." Bulletin of the American Meteorological Society 104.9 (2023): E1670-E1686.

Advisors: Fabio Massimo Zennaro, Francine Janneke Schevenhoven

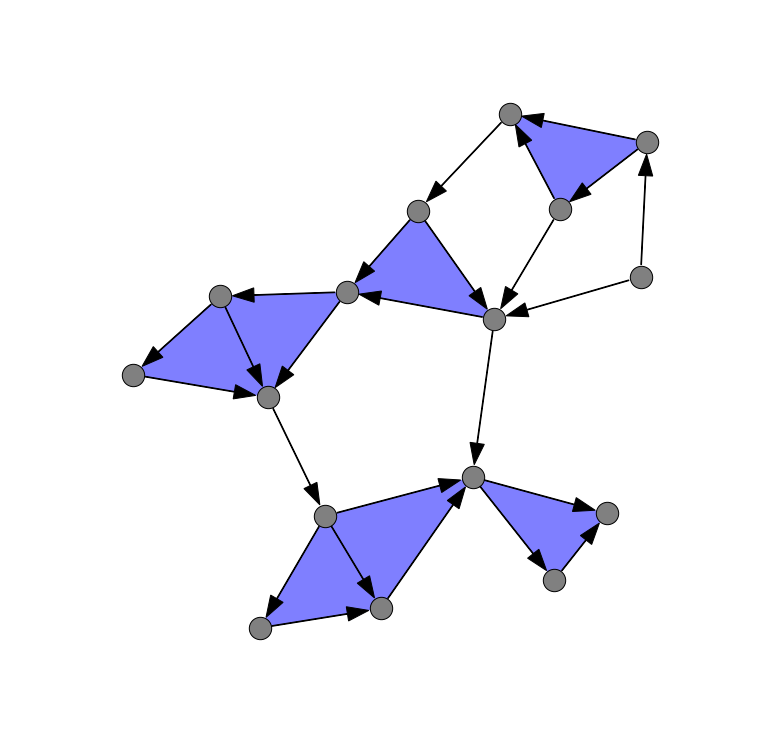

Multilevel Causal Discovery

Modelling causal relationships between variables of interest is a crucial step in understanding and controlling a system. A common approach is to represent such relations using graphs with directed arrows discriminating causes from effects.

While causal graphs are often built relying on expert knowledge, a more interesting challenge is to learn them from data. In particular, we want to consider the case where data may have been collected at multiple levels, for instance, with sensors of different resolutions. In this project we want to explore how such heterogeneous data can help the process of inferring causal structures.

[1] Anand, Tara V., et al. "Effect identification in cluster causal diagrams." Proceedings of the 37th AAAI Conference on Artificial Intelligence. Vol. 82. 2023.

Advisors: Fabio Massimo Zennaro, Pekka Parviainen

Manifolds of Causal Models

Modelling causal relationships is fundamental to understanding real-world systems. A common formalism is offered by structural causal models (SCMs), which represent these relationships graphically. However, SCMs are complex mathematical objects entailing collections of different probability distributions.

In this project we want to explore a differential-geometric perspective on structural causal models [1]. We will model an SCM and the probability distributions it generates in terms of manifolds, and we will study how this modelling encodes causal properties of interest and how relevant quantities may be computed in this framework.

[1] Dominguez-Olmedo, Ricardo, et al. "On data manifolds entailed by structural causal models." International Conference on Machine Learning. PMLR, 2023.

Advisors: Fabio Massimo Zennaro, Nello Blaser

Topological Data Analysis on Simulations

Complex systems and dynamics may be hard to formalize in a closed form, and they can often be better studied through simulations. Social systems, for instance, may be reproduced by instantiating simple agents whose interactions generate complex and emergent dynamics. Still, analyzing the behaviours arising from these interactions is not trivial.

In this project we will consider the use of topological data analysis for categorizing and understanding the behaviour of agents in agent-based models [1]. We will analyze the insights and the limitations of existing algorithms, as well as consider what dynamical information may be glimpsed through such an analysis.

[1] Swarup, Samarth, and Reza Rezazadegan. "Constructing an Agent Taxonomy from a Simulation Through Topological Data Analysis." Multi-Agent-Based Simulation XX: 20th International Workshop, MABS 2019, Montreal, QC, Canada, May 13, 2019, Revised Selected Papers 20. Springer International Publishing, 2020.

Advisors: Fabio Massimo Zennaro, Nello Blaser

Abstraction for Epistemic Logic

Weighted Kripke models constitute a powerful formalism for expressing the evolving knowledge of an agent; they allow one to express known facts and beliefs, and to recursively model the knowledge of one agent about another agent. Moreover, such relations of knowledge can be expressed graphically using suitable diagrams on which reasoning can be performed. Unfortunately, such graphs can quickly become very large and inefficient to process.

This project considers the reduction of epistemic logic graphs using ideas from causal abstraction [1]. The primary goal will be the development of ML models that can learn to output small epistemic logic graphs that still satisfy logical and consistency constraints.

[1] Zennaro, Fabio Massimo, et al. "Jointly learning consistent causal abstractions over multiple interventional distributions." Conference on Causal Learning and Reasoning. PMLR, 2023.

Advisors: Fabio Massimo Zennaro, Rustam Galimullin

Finalistic Models

The behavior of an agent may be explained either in causal terms (what has caused a certain behavior) or in finalistic terms (what aim justifies a certain behavior). While causal reasoning is well described by different mathematical formalisms (e.g., structural causal models), finalistic reasoning is still an object of research.

In this project we want to explore how a recently-proposed framework for finalistic reasoning [1] may be used to model intentions and counterfactuals in a causal bandit setting, or how it could be used to enhance inverse reinforcement learning.

[1] Compagno, Dario. "Final models: A finalistic interpretation of statistical correlation." arXiv preprint arXiv:2310.02272 (2023).

Advisors: Fabio Massimo Zennaro, Dario Compagno

Automatic hyperparameter selection for isomap

Isomap is a non-linear dimensionality reduction method with two free hyperparameters (number of nearest neighbors and neighborhood radius). Different hyperparameters result in dramatically different embeddings. Previous methods for selecting hyperparameters focused on choosing a single optimal value. In this project, you will explore the use of persistent homology to find parameter ranges that result in stable embeddings. The project has theoretical and computational aspects.

Advisor: Nello Blaser

Topological Anscombe's quartet

This topic is based on the classical Anscombe's quartet and on families of point sets with identical 1D persistence (https://arxiv.org/abs/2202.00577). The goal is to generate more interesting datasets using the simulated annealing methods presented in (http://library.usc.edu.ph/ACM/CHI%202017/1proc/p1290.pdf). This project is mostly computational.

Advisor: Nello Blaser

Persistent homology vectorization with cycle location

There are many methods of vectorizing persistence diagrams, such as persistence landscapes, persistence images, PersLay and statistical summaries. Recently, we have designed algorithms that, in some cases, efficiently detect the location of persistence cycles. In this project, you will vectorize not just the persistence diagram, but also additional information such as the location of these cycles. This project is mostly computational with some theoretical aspects.

Advisor: Nello Blaser

Divisive covers

Divisive covers are a divisive technique for generating filtered simplicial complexes. They originally used a naive way of dividing data into a cover. In this project, you will explore different methods of dividing space, based on principal component analysis, support vector machines and k-means clustering. In addition, you will explore methods of using divisive covers for classification. This project will be mostly computational.

Advisor: Nello Blaser

Learning Acquisition Functions for Cost-aware Bayesian Optimization

This is a follow-up to an earlier Master's thesis that developed a novel method for learning acquisition functions in Bayesian optimization through the use of reinforcement learning. The goal of this project is to further generalize this method (more general input, learned cost functions) and apply it to hyperparameter optimization for neural networks.
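As a point of comparison for learned acquisition functions, a common hand-designed one is expected improvement (EI). For minimization with a Gaussian posterior N(mu, sigma^2) at a candidate point and best observed value f*, EI = (f* - mu) Phi(z) + sigma phi(z) with z = (f* - mu) / sigma, where Phi and phi are the standard normal CDF and density. The sketch below implements this formula; the optional `xi` margin and the per-unit-cost variant (dividing EI by a predicted evaluation cost) are common heuristics included here only as illustrative assumptions, not as the method of the earlier thesis.

```python
import math


def expected_improvement(mu, sigma, best, xi=0.0):
    """Expected improvement over `best` for minimization, given a Gaussian
    predictive distribution N(mu, sigma**2) at a candidate point.

    `xi` is an optional margin that encourages exploration.
    """
    improvement = best - mu - xi
    if sigma <= 0.0:
        # no predictive uncertainty: the improvement is deterministic
        return max(improvement, 0.0)
    z = improvement / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return improvement * cdf + sigma * pdf


def ei_per_unit_cost(mu, sigma, best, cost):
    """Cost-aware variant (hypothetical baseline): EI per unit of predicted cost."""
    return expected_improvement(mu, sigma, best) / cost
```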

Advisors: Nello Blaser, Audun Ljone Henriksen

Stable updates

This is a follow-up to an earlier Master's thesis that introduced and studied empirical stability in the context of tree-based models. The goal of this project is to develop stable update methods for deep learning models. You will design several stable methods and empirically compare them (in terms of loss and stability) with a baseline and with one another.

Advisors: Morten Blørstad, Nello Blaser

Multimodality in Bayesian neural network ensembles

One method to assess uncertainty in neural network predictions is to use dropout or noise generators at prediction time and run every prediction many times. This leads to a distribution of predictions. Informatively summarizing such probability distributions is a non-trivial task and the commonly used means and standard deviations result in the loss of crucial information, especially in the case of multimodal distributions with distinct likely outcomes. In this project, you will analyze such multimodal distributions with mixture models and develop ways to exploit such multimodality to improve training. This project can have theoretical, computational and applied aspects.
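A minimal illustration of why means and standard deviations can mislead: the sketch below fits a two-component 1-D Gaussian mixture with expectation-maximization to a set of prediction samples. The samples are synthetic stand-ins for repeated stochastic forward passes (an assumption; no network is involved), drawn from two well-separated modes, so their overall mean lies where almost no predictions fall, while the fitted mixture recovers both likely outcomes.

```python
import math
import random


def normal_pdf(x, mu, sd):
    return math.exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def fit_gmm_1d(xs, n_components=2, iters=100):
    """Fit a 1-D Gaussian mixture to samples with expectation-maximization.

    Returns (weights, means, standard deviations).
    """
    lo, hi = min(xs), max(xs)
    # deterministic initialisation: means spread evenly over the data range
    mus = [lo + (hi - lo) * (k + 0.5) / n_components for k in range(n_components)]
    sds = [((hi - lo) / n_components) or 1.0] * n_components
    ws = [1.0 / n_components] * n_components
    for _ in range(iters):
        # E-step: responsibility of each component for each sample
        resp = []
        for x in xs:
            p = [w * normal_pdf(x, m, s) for w, m, s in zip(ws, mus, sds)]
            total = sum(p) or 1e-300
            resp.append([q / total for q in p])
        # M-step: re-estimate weights, means and standard deviations
        for k in range(n_components):
            nk = sum(r[k] for r in resp) or 1e-300
            ws[k] = nk / len(xs)
            mus[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var = sum(r[k] * (x - mus[k]) ** 2 for r, x in zip(resp, xs)) / nk
            sds[k] = max(math.sqrt(var), 1e-6)
    return ws, mus, sds


# Synthetic stand-in for repeated stochastic predictions with two likely outcomes.
rng = random.Random(1)
SAMPLES = [rng.gauss(-2.0, 0.5) for _ in range(500)] + [rng.gauss(2.0, 0.5) for _ in range(500)]

if __name__ == "__main__":
    mean = sum(SAMPLES) / len(SAMPLES)
    sd = math.sqrt(sum((x - mean) ** 2 for x in SAMPLES) / len(SAMPLES))
    print(f"mean/sd summary: {mean:.2f} +/- {sd:.2f}")
    for w, m, s in zip(*fit_gmm_1d(SAMPLES)):
        print(f"component: weight {w:.2f}, mean {m:.2f}, sd {s:.2f}")
```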

Advisor: Nello Blaser

Optimizing Jet Reconstruction with Quantum-Based Clustering Techniques

QCD jets are collimated sprays of energy and particles frequently observed at collider experiments, signaling the occurrence of high-energy processes. These jets are pivotal for understanding quantum chromodynamics at high energies and for exploring physics beyond the Standard Model. The definition of a jet typically arises from an agreement between experimentalists and theorists, formalized in jet algorithms that help make sense of the large number of particles produced in collisions.
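Standard jet algorithms of this kind belong to the generalized-kt family of sequential recombination algorithms, of which anti-kt is the most widely used. The naive O(n^3) sketch below is only meant to show the structure of such an algorithm: it assumes massless particles given as (pt, rapidity, azimuth) and merges objects by pt-weighted averaging of rapidity and azimuth instead of full four-momentum addition. Production analyses would use an established implementation such as FastJet.

```python
import math


def delta_phi(a, b):
    """Azimuthal difference a - b wrapped into (-pi, pi]."""
    d = (a - b) % (2.0 * math.pi)
    return d - 2.0 * math.pi if d > math.pi else d


def generalized_kt(particles, R=0.4, p=-1):
    """Naive sequential-recombination jet clustering.

    `particles` are (pt, rapidity, phi) tuples. p = -1 gives anti-kt,
    p = 0 Cambridge/Aachen and p = 1 the kt algorithm. Merging uses
    pt-weighted rapidity and phi (a simplification of four-vector addition).
    """
    objs = [tuple(x) for x in particles]
    jets = []
    while objs:
        # beam distance d_iB = pt_i^(2p); j = None marks a beam distance
        best = min((o[0] ** (2 * p), i, None) for i, o in enumerate(objs))
        for i in range(len(objs)):
            for j in range(i + 1, len(objs)):
                (pt_i, y_i, phi_i), (pt_j, y_j, phi_j) = objs[i], objs[j]
                dr2 = (y_i - y_j) ** 2 + delta_phi(phi_i, phi_j) ** 2
                d_ij = min(pt_i ** (2 * p), pt_j ** (2 * p)) * dr2 / R ** 2
                if d_ij < best[0]:
                    best = (d_ij, i, j)
        _, i, j = best
        if j is None:
            jets.append(objs.pop(i))  # nearest to the beam: declare it a jet
        else:
            (pt_i, y_i, phi_i), (pt_j, y_j, phi_j) = objs[i], objs[j]
            pt = pt_i + pt_j
            y = (pt_i * y_i + pt_j * y_j) / pt
            phi = (phi_i + pt_j / pt * delta_phi(phi_j, phi_i)) % (2.0 * math.pi)
            objs.pop(j)  # j > i, so removing j leaves index i valid
            objs[i] = (pt, y, phi)
    return jets
```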

This project focuses on jet reconstruction using data-driven clustering techniques. Specifically, we aim to apply fast clustering algorithms, optimized through quantum methods, to identify the optimal distribution of jets on an event-by-event basis. This approach allows us to refine jet definitions and enhance the accuracy of jet reconstruction. Key objectives include:

- Introducing a purely data-driven clustering process using standard techniques.

- Optimizing the clustering process using quantum-inspired techniques.

- Benchmarking the performance of these algorithms against existing frameworks and comparing the extracted jet populations.

By focusing on clustering methods and quantum optimization, this project aims to provide a novel perspective on jet reconstruction, improving the precision and reliability of high-energy physics analyses.

Advisors: Nello Blaser, Konrad Tywoniuk

Online learning in real-time systems

Build a model of the drilling process using online learning techniques, with the virtual simulator OpenLab (https://openlab.app/) for real-time data generation. The student will also do a short survey of existing online learning techniques and learn how to cope with errors and delays in the data.

Advisor: Rodica Mihai

Building a finite state automaton for the drilling process by using queries and counterexamples

Datasets will be generated using the virtual simulator OpenLab (https://openlab.app/). The student will study the datasets and decide upon a good setting for extracting a finite state automaton for the drilling process. The student will also do a short survey of existing techniques for extracting finite state automata from process data. One relevant technique (described in a paper on arxiv.org) uses exact learning and abstraction to extract a deterministic finite automaton describing the state dynamics of a given trained RNN, with Angluin's L* algorithm as the learner and the trained RNN as the oracle; it efficiently extracts accurate automata from trained RNNs, even when the state vectors are large and require fine differentiation.

Advisor: Rodica Mihai

Graph Neural Network for Weather Forecasting in Ocean Areas and Sensor Placement Optimization

Despite significant advancements in weather and climate forecasting over the past half-century, accurately predicting weather in maritime areas for vessels remains challenging. State-of-the-art data-driven approaches, such as Graph Neural Networks (GNNs), have recently shown success in similar forecasting tasks.

This project aims to develop a deep understanding of how effectively GNNs improve local weather forecasting in maritime areas compared to existing methods, both in terms of results and computational efficiency. It also seeks to optimize the number and placement of weather sensors in the ocean and understand the relationship between the distance of weather stations from a ship and the forecast time horizon.

Students will become familiar with different GNNs and utilize them with real data. They will work with various ML methods for time-series analysis and gain knowledge of different weather forecasting methods.

Advisor: Samaneh Abolpour Mofrad

Scaling Laws for Language Models in Generative AI

Large Language Models (LLMs) power today's most prominent language technologies in generative AI, such as ChatGPT, which in turn are changing the way people access information and solve tasks of many kinds.

Recent interest in scaling laws for LLMs has revealed trends in how their performance depends on factors such as the amount of training data, the model size, and the computational budget. (See, for example, Kaplan et al., "Scaling Laws for Neural Language Models", 2020.)
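Kaplan et al. describe, for example, the test loss as a power law in the number of parameters, L(N) = (N_c / N)^alpha_N. A minimal way to estimate such a law from a handful of training runs is a least-squares line in log-log space, since log L = alpha log N_c - alpha log N. The sketch below assumes this single-factor form and uses made-up constants to generate synthetic losses; real studies would also vary data and compute and account for noise.

```python
import math


def fit_power_law(sizes, losses):
    """Fit L(N) = (N_c / N) ** alpha by least squares in log-log space.

    Since log L = alpha * log N_c - alpha * log N, the fitted line has
    slope -alpha and intercept alpha * log N_c.
    """
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    alpha = -slope
    n_c = math.exp((my - slope * mx) / alpha)
    return alpha, n_c


def predict_loss(n, alpha, n_c):
    """Extrapolate the fitted power law to a model with n parameters."""
    return (n_c / n) ** alpha


# Synthetic example with made-up constants (not values from Kaplan et al.).
SIZES = [1e6, 1e7, 1e8, 1e9]
TRUE_ALPHA, TRUE_N_C = 0.08, 1e13
LOSSES = [predict_loss(n, TRUE_ALPHA, TRUE_N_C) for n in SIZES]

if __name__ == "__main__":
    alpha, n_c = fit_power_law(SIZES, LOSSES)
    print(f"alpha = {alpha:.4f}, N_c = {n_c:.3g}")
    print("extrapolated loss at 1e10 parameters:", predict_loss(1e10, alpha, n_c))
```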

In this project, the task is to study scaling laws for different language models with respect to one or more modeling factors.

Advisor: Dario Garigliotti

Applications of causal inference methods to omics data

Many hard problems in machine learning are directly linked to causality [1]. The graphical causal inference framework developed by Judea Pearl can be traced back to pioneering work by Sewall Wright on path analysis in genetics and has inspired research in artificial intelligence (AI) [1].

The Michoel group has developed the open-source tool Findr [2] which provides efficient implementations of mediation and instrumental variable methods for applications to large sets of omics data (genomics, transcriptomics, etc.). Findr works well on a recent data set for yeast [3].

We encourage students to explore promising connections between the fields of causal inference and machine learning. Feel free to contact us to discuss projects related to causal inference. Possible topics include: a) improving methods based on structural causal models, b) evaluating causal inference methods on data for model organisms, c) comparing methods based on causal models and neural network approaches.

References:

1. Schölkopf B, Causality for Machine Learning, arXiv (2019): https://arxiv.org/abs/1911.10500

2. Wang L and Michoel T. Efficient and accurate causal inference with hidden confounders from genome-transcriptome variation data. PLoS Computational Biology 13:e1005703 (2017). https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1005703

3. Ludl A and Michoel T. Comparison between instrumental variable and mediation-based methods for reconstructing causal gene networks in yeast. arXiv:2010.07417 https://arxiv.org/abs/2010.07417

Advisors: Adriaan Ludl, Tom Michoel

Space-Time Linkage of Fish Distribution to Environmental Conditions

Background

Conditions in the marine environment, such as temperature and currents, influence the spatial distribution and migration patterns of marine species. Hence, understanding the link between environmental factors and fish behavior is crucial for predicting, e.g., how fish populations may respond to climate change. Deriving this link is challenging because it requires the analysis of two types of datasets: (i) large environmental (currents, temperature) datasets that vary in space and time, and (ii) sparse and sporadic spatial observations of fish populations.

Project goal

The primary goal of the project is to develop a methodology that helps predict how the spatial distributions of two fish stocks (capelin and mackerel) change in response to variability in the physical marine environment (ocean currents and temperature). The information can also be used to optimize data collection by minimizing the time spent on spatial sampling of the populations.

Approach

The project will focus on the use of machine learning and/or causal inference algorithms. As a first step, we use synthetic (fish and environmental) data from analytic models that couple the two data sources. Because the ‘truth’ is known, we can judge the efficiency and error margins of the methodologies. We then apply the methodologies to real world (empirical) observations.

Advisors: Tom Michoel, Sam Subbey.

Towards precision medicine for cancer patient stratification

On average, a drug or a treatment is effective in only about half of the patients who take it. This means patients may need to try several treatments before finding one that is effective, incurring the side effects associated with each treatment. The ultimate goal of precision medicine is to provide the treatment best suited to every individual. Sequencing technologies have now made genomics data available in abundance to be used towards this goal.

In this project we will specifically focus on cancer. Most cancer patients receive a particular treatment based on the cancer type and stage, though different individuals react differently to a treatment. It is now well established that genetic mutations cause cancer growth and spreading and, importantly, that these mutations differ between individual patients. The aim of this project is to use genomic data to better stratify cancer patients and predict the treatment most likely to work. Specifically, the project will use a machine learning approach to integrate genomic data and build a classifier for the stratification of cancer patients.

Advisor: Anagha Joshi

Unraveling gene regulation from single cell data

Multi-cellularity is achieved by precise control of gene expression during development and differentiation, and aberrations of this process lead to disease. A key regulatory process in gene regulation occurs at the transcriptional level, where epigenetic and transcriptional regulators control the spatial and temporal expression of target genes in response to environmental, developmental, and physiological cues obtained from a signalling cascade. Rapid advances in sequencing technology have now made it feasible to study this process by characterizing the genome-wide patterns of diverse epigenetic and transcription factors, including at the single-cell level.

Single-cell RNA sequencing is particularly important in cancer, as it allows exploration of heterogeneous tumor samples; this heterogeneity obstructs therapeutic targeting and leads to poor survival. Despite its huge clinical relevance and potential, the analysis of single-cell RNA-seq data is challenging. In this project, we will develop strategies to infer gene regulatory networks using network inference approaches (both supervised and unsupervised). These strategies will be primarily tested on single-cell datasets in the context of cancer.

Advisor: Anagha Joshi

Developing a Stress Granule Classifier

To carry out the multitude of functions 'expected' from a human cell, the cell employs a strategy of division of labour, whereby sub-cellular organelles carry out distinct functions. Thus we traditionally understand organelles as distinct units defined both functionally and physically with a distinct shape and size range. More recently, a new class of organelles has been discovered that are assembled and dissolved on demand and are composed of liquid droplets or 'granules'. Granules show many properties characteristic of liquids, such as flow and wetting, but they can also assume many shapes and indeed also fluctuate in shape. One such liquid organelle is a stress granule (SG).

Stress granules are pro-survival organelles that assemble in response to cellular stress and are important in cancer and in neurodegenerative diseases such as Alzheimer's. They are liquid or gel-like and can assume varying sizes and shapes depending on their cellular composition.

In a given experiment we are able to image the entire cell over a time series of 1000 frames; from which we extract a rough estimation of the size and shape of each granule. Our current method is susceptible to noise and a granule may be falsely rejected if the boundary is drawn poorly in a small majority of frames. Ideally, we would also like to identify potentially interesting features, such as voids, in the accepted granules.

We are interested in applying a machine learning approach to develop a descriptor for a 'classic' granule and furthermore classify them into different functional groups based on disease status of the cell. This method would be applied across thousands of granules imaged from control and disease cells. We are a multi-disciplinary group consisting of biologists, computational scientists and physicists.

Advisors: Sushma Grellscheid, Carl Jones

Machine Learning based Hyperheuristic algorithm

Develop a machine learning-based hyper-heuristic algorithm to solve a pickup and delivery problem. A hyper-heuristic is a heuristic that chooses heuristics automatically: it seeks to automate the process of selecting, combining, generating or adapting several simpler heuristics to efficiently solve computational search problems [Handbook of Metaheuristics]. There may be multiple heuristics for solving a problem, each with its own strengths and weaknesses. In this project, we want to use machine learning techniques to learn the strengths and weaknesses of each heuristic while using them in an iterative search for high-quality solutions, and then use them intelligently for the rest of the search. Whenever new information is gathered during the search, the hyper-heuristic algorithm automatically adjusts the heuristics.
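A minimal sketch of the learning component, assuming a toy integer minimization problem in place of a pickup and delivery problem: low-level heuristics are chosen by roulette-wheel selection, and each heuristic's weight is updated by exponential smoothing of the reward it earns (in the spirit of adaptive large neighbourhood search). The heuristics, rewards, and reaction factor are hypothetical; a machine learning-based hyper-heuristic as envisioned here would replace this simple weight update with a learned model.

```python
import random


def hyper_heuristic(cost, start, heuristics, iters=1000, reaction=0.2, seed=0):
    """Select low-level heuristics by roulette wheel and adapt their weights.

    Each heuristic maps (solution, rng) to a candidate solution. A heuristic's
    weight is an exponentially smoothed average of the rewards it earned, so
    heuristics that keep improving the solution are chosen more often.
    """
    rng = random.Random(seed)
    weights = [1.0] * len(heuristics)
    current, current_cost = start, cost(start)
    for _ in range(iters):
        k = rng.choices(range(len(heuristics)), weights=weights)[0]
        candidate = heuristics[k](current, rng)
        candidate_cost = cost(candidate)
        if candidate_cost < current_cost:  # accept improvements only
            current, current_cost = candidate, candidate_cost
            reward = 1.0
        else:
            reward = 0.1  # small floor keeps every heuristic selectable
        weights[k] = (1.0 - reaction) * weights[k] + reaction * reward
    return current, weights


# Hypothetical low-level heuristics for a toy integer problem.
def step_up(x, rng):
    return x + 1


def step_down(x, rng):
    return x - 1


def random_jump(x, rng):
    return x + rng.randint(-50, 50)


if __name__ == "__main__":
    best, weights = hyper_heuristic(lambda x: (x - 7) ** 2, 0, [step_up, step_down, random_jump])
    print("best solution:", best, "final weights:", weights)
```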

Advisor: Ahmad Hemmati

Early Prediction of Abnormal Human Behaviours in Surveillance Using Deep Learning

Predicting human behaviour from partial observations is a crucial task in fields like surveillance, healthcare, human-robot interaction, and autonomous systems. In security-sensitive environments such as airports, train stations, and banks, predicting abnormal actions before they occur is essential for preventing potential threats.

While action recognition techniques focus on identifying actions after they are performed, action prediction aims to forecast future behaviours. This challenge requires understanding the current state of actions and predicting their evolution. Despite significant progress in deep learning for action recognition and prediction, limited attention has been given to predicting abnormal human behaviour in surveillance.

The research aims to develop a deep learning-based model to predict abnormal human behaviour in real-time surveillance settings using incomplete observations. The goal is to provide early detection of potentially dangerous actions, enabling pre-emptive measures.

Advisor: Allah Bux

Sign Language Recognition and Analysis Using Deep Learning

Sign languages are natural languages used by communities of deaf and hearing signers worldwide. Sign languages use hand movements and nonmanual articulators such as the head, body, mouth, eyes, and eyebrows to convey complex linguistic information. These nonmanual elements, collectively known as "nonmanuals", are critical for expressing meaning, grammar, and prosody in sign language.

This study aims to develop a machine learning-based model for detecting and tracking nonmanual movements in video datasets.

Advisors: Vadim Kimmelman, Allah Bux

Multimodal Emotion Recognition for Healthcare and Mental Health Monitoring

Mental health disorders, such as depression, anxiety, and stress, affect millions worldwide and often remain undiagnosed until they reach critical stages. Early detection and continuous monitoring of emotional states can greatly enhance therapeutic interventions and patient outcomes. Multimodal Emotion Recognition (MER) enables real-time monitoring of emotional cues in speech, facial expressions, and text. By integrating these modalities, MER can assess a patient's emotional and mental state more accurately and comprehensively than a single modality alone, supporting healthcare professionals in diagnosis and treatment.

This study aims to explore the application of MER to mental health diagnostics and therapy, using deep learning-based techniques to automatically analyse patients' emotional cues and aid the early detection of mental health disorders.
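One common way to integrate modalities is decision-level (late) fusion: each modality has its own classifier, and their class probabilities are combined afterwards. The sketch below shows a weighted average that simply skips a missing modality. It is an illustration under assumptions, not the study's design: the emotion labels, modality weights and probability vectors are all made up, and in practice the per-modality probabilities would come from trained deep models.

```python
from typing import Dict, Optional, Sequence

# Hypothetical label set; a real study would use its own annotation scheme.
EMOTIONS = ("neutral", "happy", "sad", "anxious")


def late_fusion(
    probs: Dict[str, Optional[Sequence[float]]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Weighted average of per-modality class probabilities (decision-level fusion)."""
    total = [0.0] * len(EMOTIONS)
    weight_sum = 0.0
    for modality, p in probs.items():
        if p is None:  # modality unavailable, e.g. no transcript for this time window
            continue
        w = weights.get(modality, 1.0)
        for i, value in enumerate(p):
            total[i] += w * value
        weight_sum += w
    if weight_sum == 0:
        raise ValueError("no modality available to fuse")
    # Renormalise by the weights actually used so the output is still a distribution.
    return {label: t / weight_sum for label, t in zip(EMOTIONS, total)}


fused = late_fusion(
    probs={
        "speech": [0.1, 0.1, 0.6, 0.2],
        "face": [0.2, 0.1, 0.5, 0.2],
        "text": None,
    },
    weights={"speech": 0.5, "face": 0.3, "text": 0.2},
)
print(max(fused, key=fused.get), fused)
```

Late fusion is easy to extend and tolerates missing inputs; feature-level or attention-based fusion inside a single network is the usual alternative when cross-modal interactions matter.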

Advisor: Allah Bux

Image calibration for size estimation and density measurement of marine species

The Mareano Project at the Institute of Marine Research has been tasked with studying vulnerable, yet environmentally important seabed communities in Norwegian waters since 2006. A large component of this involves collecting video of the seafloor and identifying, quantifying and localizing the marine life within. However, image annotation is a labour-intensive task that introduces inconsistencies and human error. The following projects aim to develop automated tools to improve the extraction and quality of marine ecological data from imagery.

Estimating the size of marine species is important for numerous reasons, such as measuring ecosystem health, biodiversity and recovery to support conservation and management. In underwater image surveys, accurate calculation of image area is crucial for this, but it is complicated by variation in the altitude and angle of the camera platform relative to the seabed. Lasers mounted a fixed distance apart are required for scaling. However, the pixel distance between the lasers is measured manually by analysts, and the visibility of the lasers varies.

This project will develop a pipeline that first detects lasers in imagery and flags frames where lasers are missing or appear inconsistently. The image area can then be estimated, accounting for the angle of the seabed. With a known image area, object detection/segmentation methods will be explored to automatically estimate the size/density of marine species. Other emerging methods for image calibration can be explored to improve practical applicability, speed and efficiency.
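The arithmetic behind laser scaling is short: the known laser separation divided by the pixel distance between the two laser dots gives metres per pixel, which converts frame dimensions into an area, pixel lengths into sizes, and counts into densities. The sketch below assumes the two laser dots have already been detected, that the lasers are parallel, and that the camera looks straight down at a flat seabed; it deliberately does not correct for seabed angle, which the project itself calls for. The frame size, laser positions, 0.1 m separation and count of 18 individuals are hypothetical values.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates of a detected laser dot


def metres_per_pixel(laser_points: List[Point], laser_separation_m: float) -> Optional[float]:
    """Scale from a laser pair; None flags a frame whose lasers are missing or inconsistent."""
    if len(laser_points) != 2:
        return None  # missing (or spurious extra) laser detections: flag for review
    (x1, y1), (x2, y2) = laser_points
    pixel_distance = math.hypot(x2 - x1, y2 - y1)
    if pixel_distance == 0:
        return None
    return laser_separation_m / pixel_distance


def image_area_m2(width_px: int, height_px: int, scale: float) -> float:
    """Frame footprint, assuming the camera looks straight down at a flat seabed."""
    return (width_px * scale) * (height_px * scale)


def object_length_m(length_px: float, scale: float) -> float:
    """Convert a measured length (e.g. from a segmentation mask) from pixels to metres."""
    return length_px * scale


# Hypothetical frame: 1920x1080 px, laser dots 120 px apart, lasers 0.1 m apart.
scale = metres_per_pixel([(900.0, 540.0), (1020.0, 540.0)], laser_separation_m=0.1)
assert scale is not None, "lasers missing: flag this frame"
area = image_area_m2(1920, 1080, scale)
density = 18 / area  # e.g. 18 individuals counted by a detector in this frame
print(round(area, 2), round(density, 1), round(object_length_m(240, scale), 2))
```

Returning `None` rather than guessing a scale keeps the "highlight missing lasers" step explicit, so flagged frames can be routed to an analyst or to an alternative calibration method.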

Advisors: Chloe Game (UIB) and Nils Piechaud (Institute of Marine Research)

Tracking of marine species in video for optimal frame extraction


Monitoring of the deep sea and species living on the seabed requires ecologists to manually extract representative, high-quality frames from video for their analysis. This can be a challenging process given the technical difficulty of imaging in these environments. Images suffer from effects such as blurring, low contrast, non-uniform illumination, and colour saturation and loss, so the appearance of species is highly variable. This is exacerbated further by the uneven movement of the imaging platform in 3D space.

This project will develop a pipeline to automatically detect and track seabed species across multiple video frames, improving the manual video annotation procedure. This will be used to design a tool that prepares image datasets from video in a standardized, representative way, avoiding duplicates and selecting high-quality images of the marine species of interest. These images can be used for further ecological analysis and for generating training datasets for machine learning tasks.
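Once a tracker has linked detections of the same individual across frames, avoiding duplication reduces to keeping one frame per track, and "high quality" can be approximated by a sharpness score. The sketch below uses the variance of the Laplacian, a common focus measure, on grayscale crops stored as nested lists. The input format (track ID mapped to `(frame_index, crop)` pairs) and the choice of sharpness as the only quality criterion are assumptions; a real tool would likely also weigh contrast, illumination and object size, and would operate on detector output rather than synthetic patches.

```python
from statistics import pvariance
from typing import Dict, List, Sequence, Tuple

Crop = Sequence[Sequence[float]]  # grayscale patch: rows of pixel intensities


def laplacian_variance(crop: Crop) -> float:
    """Focus measure: variance of the 4-neighbour Laplacian (higher means sharper)."""
    h, w = len(crop), len(crop[0])
    responses = [
        crop[y - 1][x] + crop[y + 1][x] + crop[y][x - 1] + crop[y][x + 1] - 4 * crop[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses) if responses else 0.0


def best_frame_per_track(tracks: Dict[int, List[Tuple[int, Crop]]]) -> Dict[int, int]:
    """Keep only the sharpest frame for each tracked individual, avoiding duplicates."""
    return {
        track_id: max(observations, key=lambda obs: laplacian_variance(obs[1]))[0]
        for track_id, observations in tracks.items()
        if observations
    }


# Synthetic crops of one hypothetical individual (track 7) seen in frames 10-12.
flat = [[100] * 6 for _ in range(6)]  # featureless, e.g. heavily blurred
checker = [[255 if (x + y) % 2 else 0 for x in range(6)] for y in range(6)]  # crisp detail
soft = [[140 if (x + y) % 2 else 120 for x in range(6)] for y in range(6)]  # low contrast
tracks = {7: [(10, flat), (11, checker), (12, soft)]}
print(best_frame_per_track(tracks))
```

Selecting per track rather than per frame is what removes duplication: an individual visible in fifty consecutive frames contributes one image to the dataset.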

Advisors: Chloe Game (UIB) and Nils Piechaud (Institute of Marine Research)

Machine learning for solving satisfiability problems and applications in cryptanalysis

Advisor: Igor Semaev

Explainable AI at Equinor

This Master's thesis is part of the project Machine Teaching for XAI (see https://xai.w.uib.no) and is carried out in collaboration between UiB and Equinor.

Advisors: One of Pekka Parviainen, Jan Arne Telle or Emmanuel Arrighi, together with Bjarte Johansen from Equinor.

Explainable AI at Eviny

This Master's thesis is part of the project Machine Teaching for XAI (see https://xai.w.uib.no) and is carried out in collaboration between UiB and Eviny.

Advisors: One of Pekka Parviainen, Jan Arne Telle or Emmanuel Arrighi, together with Kristian Flikka from Eviny.

Own topic

If you want to suggest your own topic, please contact Pekka Parviainen, Fabio Massimo Zennaro or Nello Blaser.